Recent advancements in conversational AI have accelerated the development of agents capable of increasingly complex, human-like interactions. Yet a fundamental challenge persists: domain alignment, the ability of agents to operate with organization-specific intelligence while preserving accuracy, security, and control. Most conventional chatbot platforms rely solely on large general-purpose models, which can hallucinate, drift from the brand voice, and struggle to leverage proprietary data effectively.

To address this, DaveAI introduces Microbot, a low-code platform that enables enterprises to design, build, and deploy domain-specific conversational AI agents with speed and precision. At its core is a multi-layered language model architecture that combines custom-trained Enterprise Small Language Models (SLMs) with powerful LLMs in a nested configuration, delivering both deep contextual understanding and high scalability. This hybrid architecture keeps conversations on-brand, compliant, and contextually rich, while giving enterprises full control over knowledge pipelines and deployment workflows.

Microbot is a core part of DaveAI’s GRYD agentic architecture, designed to deliver secure, domain-specific conversational intelligence.

Core Components of Microbot

- Context Profiling: Maintains persistent conversational intelligence by storing both Agent Persona (voice, tone, role, and domain expertise) and User Context (facts such as name, preferences, past interactions, and intent patterns). This enables Microbot agents to deliver personalized, consistent, and context-aware conversations across sessions without losing brand alignment or conversational continuity. In the Microbot platform, the memory retention period is fully configurable to suit specific project or compliance needs.

- Interaction History: Logs and organizes all user-agent conversations with precise timestamps, preserving structured details such as query type, summary, context, participants, and chronological sequence so the platform can maintain continuity and recall context across conversations.

- Semantic Links: Organizes domain knowledge from documents, FAQs, and other sources into structured subject–object relationships. Entries are classified by type and variables, enabling the platform to retrieve precise, context-aware information during conversations.

- Flows: Stores structured workflows that guide task execution, available in two modes: Manual Agents, in which functions are triggered explicitly, and AI Agents, in which LLMs dynamically determine and execute the next steps. Together, the two modes balance precision and adaptability in conversation handling.

- VecSearch: Tailored exclusively to enterprise-approved data, minimizing or eliminating reliance on external sources that have not been approved. Offers full deployment flexibility: on-premise, on the enterprise's preferred cloud provider (AWS, Google Cloud, Azure, etc.), or migrated seamlessly between providers. Enables internal-only usage for highly sensitive data through network-restricted access. Incorporates Reinforcement Learning from Human Feedback (RLHF) to fine-tune AI agents using human-guided rewards and preferences, building a reward model that accelerates training and improves goal alignment.

- Information Grid: Integrates RAG (Retrieval Augmented Generation) and GAG (Graph Augmented Generation) to retrieve precise answers from enterprise-fed documents while keeping responses contextually accurate. It begins with Start Intent logic that determines what action to take, when to execute it, and which AI agent or model to invoke, and it coordinates the activities of multiple agents and managers to streamline decision-making and execution in complex enterprise workflows.

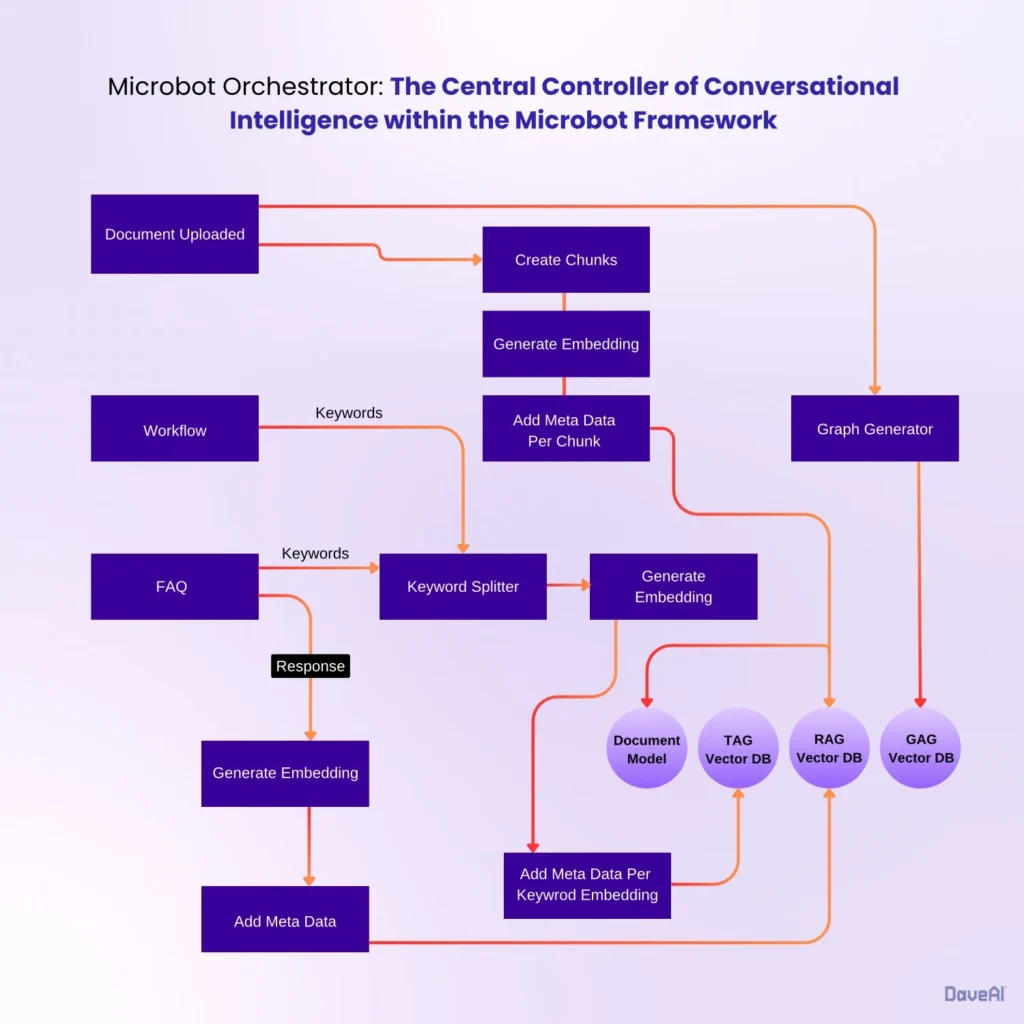
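A minimal sketch of how the Context Profiling and Interaction History components could be modeled, assuming a simple in-memory store. The class and field names (`AgentPersona`, `InteractionRecord`, `ContextProfile`, `retention`) are hypothetical; the source does not describe Microbot's storage schema. The sketch illustrates two ideas from the text: timestamped interaction records kept in chronological order, and a configurable retention window that prunes older records.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class AgentPersona:
    # Hypothetical persona fields: voice, tone, role, and domain expertise.
    voice: str
    tone: str
    role: str
    expertise: list[str]


@dataclass
class InteractionRecord:
    # One logged user-agent exchange with a precise timestamp.
    timestamp: datetime
    query_type: str
    summary: str
    participants: tuple[str, ...]


@dataclass
class ContextProfile:
    persona: AgentPersona
    user_facts: dict[str, str] = field(default_factory=dict)  # name, preferences, ...
    history: list[InteractionRecord] = field(default_factory=list)
    retention: timedelta = timedelta(days=30)  # configurable per project or policy

    def log(self, record: InteractionRecord) -> None:
        # Append, then keep the history in chronological order.
        self.history.append(record)
        self.history.sort(key=lambda r: r.timestamp)

    def prune(self, now: datetime) -> int:
        # Drop records older than the retention window; return how many were removed.
        cutoff = now - self.retention
        kept = [r for r in self.history if r.timestamp >= cutoff]
        removed = len(self.history) - len(kept)
        self.history = kept
        return removed

    def recent(self, n: int = 3) -> list[InteractionRecord]:
        # The n most recent records, for context recall in the next turn.
        return self.history[-n:]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
profile = ContextProfile(
    persona=AgentPersona("friendly", "concise", "support agent", ["billing"]),
    user_facts={"name": "Asha", "preferred_channel": "WhatsApp"},
    retention=timedelta(days=7),
)
profile.log(InteractionRecord(now - timedelta(days=1), "billing", "Requested refund", ("Asha", "bot")))
profile.log(InteractionRecord(now - timedelta(days=10), "billing", "Asked about invoice", ("Asha", "bot")))
print(profile.prune(now))  # 1 (the 10-day-old record is outside the 7-day window)
```

Keeping `retention` as a per-profile setting mirrors the point that the retention period can be configured per project or compliance requirement.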

Microbot Orchestrator

Microbot Orchestrator acts as the central controller for all conversational intelligence within the Microbot framework. It manages query enrichment, information retrieval, and workflow activation. When a customer query is received, the orchestrator first performs a multi-step augmentation process:

- Reformat – Clean and restructure the query for consistency.

- Identify Missing Fields – Detect incomplete or ambiguous elements.

- Augment Sentence – Enrich the query with relevant contextual data.

- Add Nouns & Missing Information – Expand with key entities for precision.
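The four augmentation steps above can be sketched as a small pipeline. This is an illustration under stated assumptions, not Microbot's implementation: the slot schema (`REQUIRED_FIELDS`, `SLOT_PATTERNS`), the regex-based missing-field check, and the way context and entities are appended to the query are all hypothetical stand-ins for logic that is likely model-driven in practice.

```python
import re

# Hypothetical slot schema: which fields an intent needs and how to spot them.
REQUIRED_FIELDS = {"order_status": ["order_id"]}
SLOT_PATTERNS = {"order_id": r"\b\d{5,}\b"}


def reformat(query: str) -> str:
    # Step 1: collapse whitespace and lowercase for consistent matching.
    return re.sub(r"\s+", " ", query).strip().lower()


def identify_missing_fields(query: str, intent: str) -> list[str]:
    # Step 2: list required slots that the query does not mention.
    return [f for f in REQUIRED_FIELDS.get(intent, []) if not re.search(SLOT_PATTERNS[f], query)]


def augment_sentence(query: str, missing: list[str], user_context: dict[str, str]) -> str:
    # Step 3: fill missing slots from stored user context when it is available.
    additions = [f"{f}={user_context[f]}" for f in missing if f in user_context]
    return f"{query} ({', '.join(additions)})" if additions else query


def add_entities(query: str, entities: list[str]) -> str:
    # Step 4: append key entities (products, brands) not already in the query.
    extra = [e.lower() for e in entities if e.lower() not in query]
    return f"{query} [entities: {', '.join(extra)}]" if extra else query


def enrich(query: str, intent: str, user_context: dict[str, str], entities: list[str]) -> str:
    q = reformat(query)
    missing = identify_missing_fields(q, intent)
    q = augment_sentence(q, missing, user_context)
    return add_entities(q, entities)


print(enrich("  Where is   my ORDER? ", "order_status", {"order_id": "123456"}, ["Acme Store"]))
# where is my order? (order_id=123456) [entities: acme store]
```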

Once the query is fully enriched, it runs through vector search to find the most relevant data. The orchestrator then classifies the action into one of three categories: RAG (pure document retrieval), GAG (retrieval over a graph-based representation of knowledge), or TAG (workflow/task execution).

For document-based queries, the orchestrator triggers the RAG/GAG pipeline across all six Microbot components to verify whether the available data contains enough information to answer the query accurately. For task-based queries, TAG (Task Augmented Generation) checks whether a related workflow is already active; if so, it confirms with the customer (“Do you want to proceed with X or Y?”) before continuing. This layered approach ensures every query is accurately classified and executed.
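A minimal routing sketch follows. The keyword cues used by `classify` are a hypothetical stand-in for the orchestrator's real classifier, which the source does not describe. The part that mirrors the text is the TAG branch: when another workflow is already active, it asks the customer to choose instead of silently switching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical cue words; a production classifier would likely be model-based.
TASK_VERBS = {"book", "cancel", "schedule", "update", "apply"}
RELATION_CUES = ("related to", "compatible with", "difference between")


def classify(query: str) -> str:
    q = query.lower()
    tokens = set(re.findall(r"[a-z]+", q))
    if tokens & TASK_VERBS:
        return "TAG"  # workflow/task execution
    if any(cue in q for cue in RELATION_CUES):
        return "GAG"  # relationship question over graph-structured knowledge
    return "RAG"      # plain document retrieval


@dataclass
class Session:
    active_workflows: list[str] = field(default_factory=list)


def route(query: str, session: Session, candidate_workflow: str = "") -> str:
    path = classify(query)
    if path == "TAG":
        if session.active_workflows:
            # Confirm before switching away from a workflow that is already running.
            current = session.active_workflows[-1]
            return f"Do you want to proceed with {current} or {candidate_workflow}?"
        session.active_workflows.append(candidate_workflow)
        return f"Starting workflow: {candidate_workflow}"
    return f"Dispatching to {path} pipeline"


s = Session()
print(route("Book a test drive", s, "test_drive_booking"))
# Starting workflow: test_drive_booking
print(route("Cancel my service appointment", s, "service_cancellation"))
# Do you want to proceed with test_drive_booking or service_cancellation?
print(route("What is the warranty period?", s))
# Dispatching to RAG pipeline
```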

In Microbot, RAG uses enterprise-fed documents to generate accurate, context-based answers. TAG incorporates additional information, including multimedia such as videos, images, and slideshows. By comparing the RAG and TAG outputs, Microbot pinpoints the additional information provided by TAG and integrates it with the common response, producing a detailed explanation of the query. This allows Microbot to deliver multimodal answers to both single and compound queries.

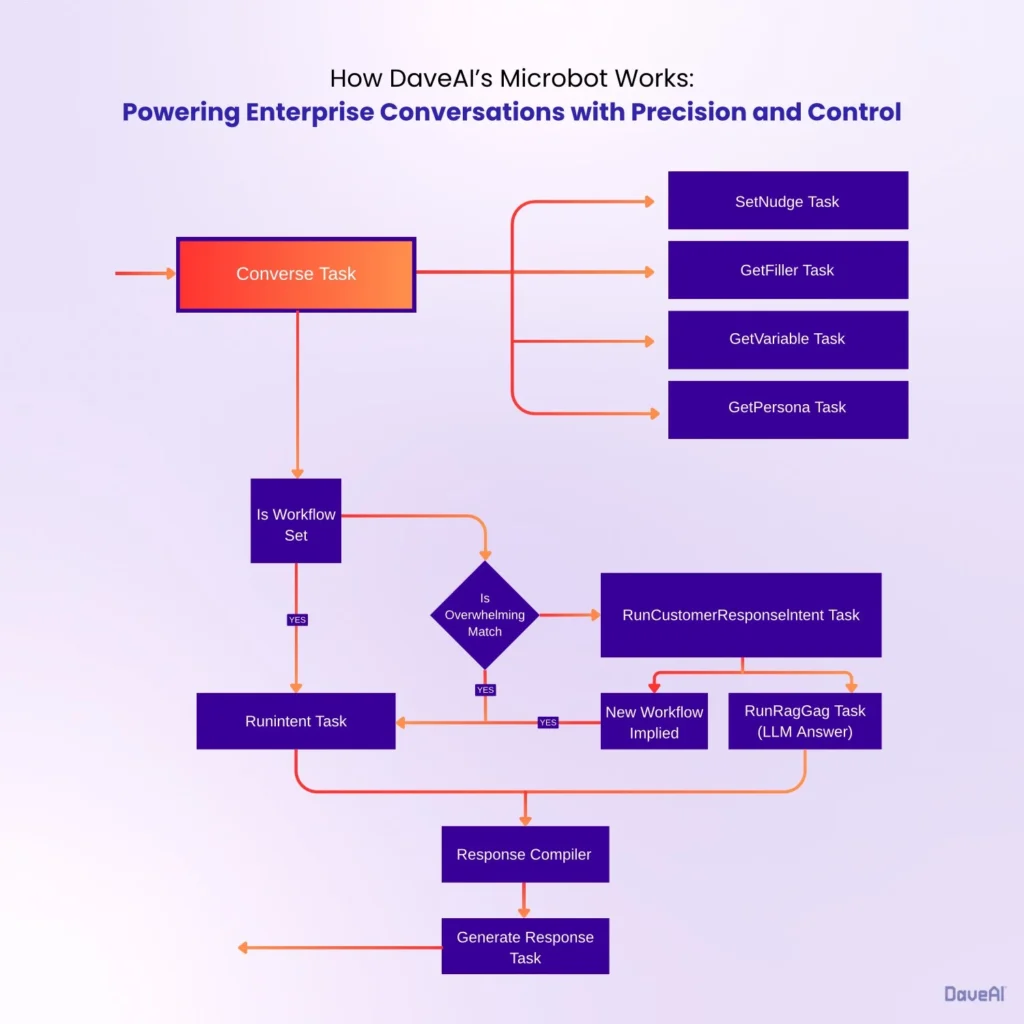
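The RAG/TAG comparison can be pictured as a set difference over response items. The sketch assumes each pipeline returns a list of typed items and treats the RAG answer as the common base response; the `Item` type is hypothetical, and exact-match comparison stands in for the semantic comparison a production system would need.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    kind: str     # "text", "video", "image", "slideshow", ...
    content: str


def merge_outputs(rag: list[Item], tag: list[Item]) -> list[Item]:
    # Treat the RAG answer as the common base, then append TAG-only items once each.
    seen = set(rag)
    extras = []
    for item in tag:
        if item not in seen:
            seen.add(item)
            extras.append(item)
    return list(rag) + extras


rag = [Item("text", "The warranty covers 3 years.")]
tag = [Item("text", "The warranty covers 3 years."), Item("video", "warranty_claims.mp4")]
for item in merge_outputs(rag, tag):
    print(item.kind, item.content)
# text The warranty covers 3 years.
# video warranty_claims.mp4
```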

Conversation Pipeline

On receiving user input, the system first autonomously reformats the query, detects and fills missing fields, enriches it with relevant entities and context, and then classifies it for the correct action path (RAG, GAG, or TAG).

The orchestrator then retrieves relevant data entries from all six Microbot components using Microbot's data relevance search. By default, it returns the 10 most relevant, context-rich results; this value can be tuned to balance speed and precision.

Finally, the retrieved data is tagged for context, enabling more accurate, workflow-aware responses.
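A minimal sketch of top-k relevance search with source tagging, assuming every entry from the six components has already been embedded as a vector. Cosine similarity and `heapq.nlargest` are swapped-in choices for illustration; the source does not specify Microbot's relevance scoring. The default `k=10` mirrors the default result count described above, and each hit keeps the name of its source component as a tag for later steps.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def relevance_search(query_vec: list[float], entries: list[tuple[str, str, list[float]]], k: int = 10):
    """entries: (component, text, vector) tuples drawn from all six components."""
    scored = ((cosine(query_vec, vec), component, text) for component, text, vec in entries)
    top = heapq.nlargest(k, scored, key=lambda s: s[0])
    # Tag each hit with its source component for downstream, workflow-aware use.
    return [{"component": c, "text": t, "score": round(s, 3)} for s, c, t in top]


entries = [
    ("Semantic Links", "Warranty: 3 years", [1.0, 0.0, 0.0]),
    ("Flows", "Book a service appointment", [0.0, 1.0, 0.0]),
    ("Interaction History", "User asked about warranty last week", [0.9, 0.1, 0.0]),
]
for hit in relevance_search([1.0, 0.0, 0.0], entries, k=2):
    print(hit["component"], hit["score"])
```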

Platform Implementation

Microbot is deployed as a modular conversational AI framework that can integrate with multiple Large Language Models (LLMs), including GPT-3.5 Turbo, GPT-4, Google Bard (LaMDA), Meta LLaMA 3, and other generative AI platforms accessible through a REST API. Microbot supports conversations powered by LLMs hosted on platforms such as Azure and OpenAI, or deployed securely on-premise. This model-agnostic architecture lets businesses select and switch between underlying LLMs without disrupting the conversational flow.
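Model-agnostic designs are commonly built around a thin adapter interface, with conversation state held outside any single model, so switching models does not lose context. The sketch assumes that pattern; `ChatModel`, `EchoModel`, `ModelRouter`, and the model names are hypothetical, and `EchoModel` is an offline placeholder where a real adapter would call a provider's REST API.

```python
from __future__ import annotations

from typing import Optional, Protocol


class ChatModel(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Offline placeholder; a real adapter would call the provider's REST API here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        # Report how much conversation context this model received.
        return f"[{self.name}] context lines: {len(prompt.splitlines())}"


class ModelRouter:
    def __init__(self) -> None:
        self._models: dict[str, ChatModel] = {}
        self.active: Optional[str] = None
        self.history: list[str] = []  # conversation state lives outside any model

    def register(self, model: ChatModel) -> None:
        self._models[model.name] = model
        if self.active is None:
            self.active = model.name

    def switch(self, name: str) -> None:
        if name not in self._models:
            raise KeyError(f"unknown model: {name}")
        self.active = name

    def send(self, user_msg: str) -> str:
        if self.active is None:
            raise RuntimeError("no model registered")
        self.history.append(f"user: {user_msg}")
        reply = self._models[self.active].complete("\n".join(self.history))
        self.history.append(f"bot: {reply}")
        return reply


router = ModelRouter()
router.register(EchoModel("model-a"))
router.register(EchoModel("model-b"))
print(router.send("Hi"))                             # [model-a] context lines: 1
router.switch("model-b")
print(router.send("What does the warranty cover?"))  # [model-b] context lines: 3
```

Because the router, not the model, owns the history, the second model sees the earlier turns after the switch.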

Users can interact through responsive voice, text chat, and WhatsApp interfaces, each of which draws on the agent's memory components to deliver context-aware, highly personalized responses. Persistent session context lets the system recall previous interactions, maintain user profiles, and adapt dynamically as the conversation evolves.

For organizations seeking immersive engagement, Microbot supports an optional avatar add-on. Powered by real-time rendering and lip-sync capabilities, the avatar transforms standard text interactions into visually engaging, human-like conversations.

Microbot Use Cases

- Customer Support Bots

  - Automates responses to common customer queries across multiple channels.

  - Uses contextual memory to provide consistent answers and recall past interactions.

  - Reduces support costs and improves first-response resolution rates.

- Lead Qualification Agents

  - Engages potential customers in natural, human-like conversations.

  - Collects and validates essential lead information through guided questioning.

  - Automatically scores and prioritizes leads based on predefined criteria.

- Internal Workflow Automation

  - Assists employees with routine operational tasks such as approvals, status checks, and data entry.

  - Integrates with enterprise systems to pull or update information in real time.

  - Streamlines repetitive processes to save time and reduce manual errors.

- Knowledge Assistants

  - Acts as an always-available internal knowledge resource for teams.

  - Retrieves accurate information from structured and unstructured enterprise data sources.

  - Adapts responses based on the user's role, past queries, and organizational context.

Evaluation of the Platform

1. Fact-checking: Fact-checking is one of the validations the Microbot platform undergoes. It first normalizes both the AI's answer and the reference (correct) answer so they can be compared fairly. It then runs several checks: spelling and word-level differences, the number of matching words, and the semantic similarity of the sentences. If these scores fall below the set thresholds, the system goes deeper, breaking both answers into smaller “claims” and validating each one individually with an AI model. This way, it does not just catch answers that sound similar; it verifies that each fact is correct. Using this multi-layered method, Microbot consistently achieves a semantic similarity score of 96% and a keyword match score of 67%, demonstrating the platform's ability to handle complex, multi-step queries with accuracy and clarity.
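The layered fact-check can be sketched as follows, assuming Python's standard `difflib.SequenceMatcher` for the character-level (spelling) comparison and word overlap for the keyword score. The semantic-similarity function and the claim verifier are passed in as callables because the source does not name the embedding model or AI model it uses; `toy_semantic_sim` and `toy_verify_claim` are hypothetical placeholders, and the threshold values are illustrative.

```python
import re
from difflib import SequenceMatcher
from typing import Callable


def normalize(text: str) -> str:
    # Lowercase, replace punctuation with spaces, and collapse whitespace.
    return re.sub(r"\s+", " ", re.sub(r"[^a-z0-9\s]", " ", text.lower())).strip()


def keyword_overlap(answer: str, reference: str) -> float:
    # Share of reference words that also appear in the answer.
    ref = set(reference.split())
    return len(ref & set(answer.split())) / len(ref) if ref else 0.0


def split_claims(text: str) -> list[str]:
    # Naive claim splitting on sentence punctuation; a real system may use a model.
    return [c.strip() for c in re.split(r"[.;!?]", text) if c.strip()]


def fact_check(
    answer: str,
    reference: str,
    semantic_sim: Callable[[str, str], float],
    verify_claim: Callable[[str, list[str]], bool],
    thresholds: tuple[float, float, float] = (0.8, 0.6, 0.85),
) -> tuple[bool, dict[str, float]]:
    a, r = normalize(answer), normalize(reference)
    scores = {
        "spelling": SequenceMatcher(None, a, r).ratio(),
        "keyword": keyword_overlap(a, r),
        "semantic": semantic_sim(a, r),
    }
    if all(score >= t for score, t in zip(scores.values(), thresholds)):
        return True, scores
    # Below threshold: verify every answer claim against the reference claims.
    ref_claims = split_claims(reference)
    return all(verify_claim(c, ref_claims) for c in split_claims(answer)), scores


def toy_semantic_sim(a: str, b: str) -> float:
    # Placeholder: word-set Jaccard; a real system would compare embeddings.
    sa, sb = set(a.split()), set(b.split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0


def toy_verify_claim(claim: str, ref_claims: list[str]) -> bool:
    # Placeholder for an AI-model judgment: exact match after normalization.
    return normalize(claim) in {normalize(rc) for rc in ref_claims}


print(fact_check("The warranty lasts 5 years. Service is free.",
                 "The warranty lasts 3 years. Service is free.",
                 toy_semantic_sim, toy_verify_claim)[0])  # False
```

In the example, the spelling and keyword scores pass, but the semantic score falls below its threshold, so the claim-level check runs and catches the wrong warranty length.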

2. Performance Latency: Microbot also undergoes performance benchmarking across both closed-source and open-source models. Closed models such as GPT are accessed through API-based inference, while open-source and custom-hosted LLMs are tested using inference engines such as vLLM and TGI (Text Generation Inference). Benchmarking metrics include LLM preference evaluations and TGI-specific performance tests, with a primary focus on latency, measured as the time in milliseconds to receive the first token for a given query. Across all tested LLMs, Microbot keeps the response time to a user query under 500 ms (half a second), providing replies that are both fast and accurate.
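Time to first token can be measured by timing a streaming call until the first chunk arrives. In this sketch, `fake_stream` is a hypothetical stand-in for a streaming client (API-based, or served through vLLM or TGI), and the 500 ms budget comes from the text; the median and nearest-rank p95 summary is an illustrative choice, not a documented Microbot metric.

```python
import math
import statistics
import time
from typing import Callable, Iterator


def time_to_first_token_ms(stream_fn: Callable[[str], Iterator[str]], query: str) -> float:
    start = time.perf_counter()
    stream = iter(stream_fn(query))
    try:
        next(stream)  # blocks until the first token arrives
    except StopIteration:
        raise RuntimeError("stream produced no tokens") from None
    return (time.perf_counter() - start) * 1000.0


def summarize(samples_ms: list[float], budget_ms: float = 500.0) -> dict:
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]  # nearest-rank percentile
    return {"p50": statistics.median(ordered), "p95": p95, "within_budget": p95 < budget_ms}


def fake_stream(query: str) -> Iterator[str]:
    # Hypothetical stand-in for a streaming LLM client; simulates ~20 ms to first token.
    time.sleep(0.02)
    yield "Hello"
    yield " there"


samples = [time_to_first_token_ms(fake_stream, "What is the warranty period?") for _ in range(5)]
print(summarize(samples))
```

Reporting a tail percentile alongside the median makes a "under 500 ms" claim checkable for most requests rather than only the typical one.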

FAQs

1. What is DaveAI’s Microbot and how is it different from a regular chatbot?

Microbot is a low-code, enterprise-grade conversational AI platform. It combines custom-trained Small Language Models (SLMs) with Large Language Models (LLMs) in a hybrid architecture. Unlike generic chatbots, it offers domain-specific intelligence, strict data security, and brand-aligned conversations powered by proprietary enterprise data, not just public internet knowledge.

2. How does Microbot ensure security and compliance for sensitive enterprise data?

All AI interactions are powered by enterprise-approved data sources, with full flexibility for on-premise or preferred cloud deployments (AWS, Azure, Google Cloud, etc.). It uses human-in-the-loop reinforcement learning (RLHF) to maintain compliance and quality.

3. What kind of AI architecture does Microbot use?

The platform uses a multi-layered architecture that nests Enterprise SLMs within LLMs. This approach ensures deep contextual understanding while allowing enterprises to fully control their knowledge pipelines, deployment workflows, and compliance requirements.

4. Can Microbot work with multiple AI models?

Yes. It is model-agnostic. It integrates seamlessly with GPT-3.5, GPT-4, Google Bard (LaMDA), Meta LLaMA 3, and other REST API–enabled AI platforms. Enterprises can switch between models without disrupting conversations.

5. What are the main use cases for Microbot?

Key applications include customer support bots, lead qualification agents, internal workflow automation assistants, and internal knowledge assistants. Each leverages contextual memory, structured workflows, and enterprise-specific data to deliver accurate, personalized responses.