AI systems are evolving rapidly, now capable of handling complex tasks, switching between tools, and managing stateful interactions. However, the lack of structured context sharing across AI models and external services often leads to fragmented workflows. Enter the Model Context Protocol (MCP)—a standardized protocol designed to manage context across tools and models in a consistent, secure manner.

Introduced by Anthropic and since supported by OpenAI, Google DeepMind, and Microsoft, MCP builds on JSON-RPC 2.0 to structure its messages, allowing AI models to understand, maintain, and share context across multi-tool environments.

In this guide, we will explore:

- What MCP is and how it functions

- Why it’s crucial for modern AI applications

- Top 10 use cases with real-world examples

- Implementation strategies and best practices

- Challenges, tools, and the future of MCP

Let’s dive in and understand how MCP is redefining context management in AI.

What is Model Context Protocol?

The Model Context Protocol (MCP) is an open standard for managing and preserving context across interactions, tools, and AI models. It gives language models (such as Claude, GPT-4, or Gemini) a consistent way to communicate with the tools they use, whether that is a CRM, file system, API, or other external data source.

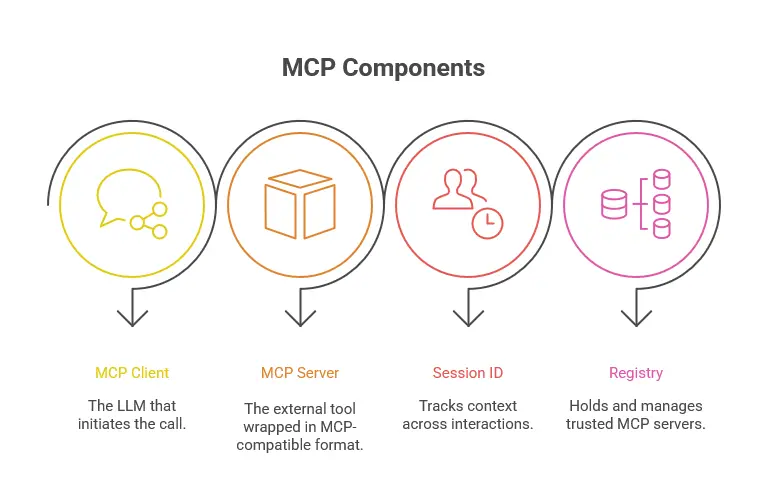

Key Components

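- MCP Client: The connector inside the host application (for example, a chat app or IDE) that talks to a server and issues requests on the model's behalf.

- MCP Server: A program that exposes an external tool or data source in MCP-compatible form.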

- Session ID: Tracks context across interactions.

- Registry: Holds and manages trusted MCP servers.

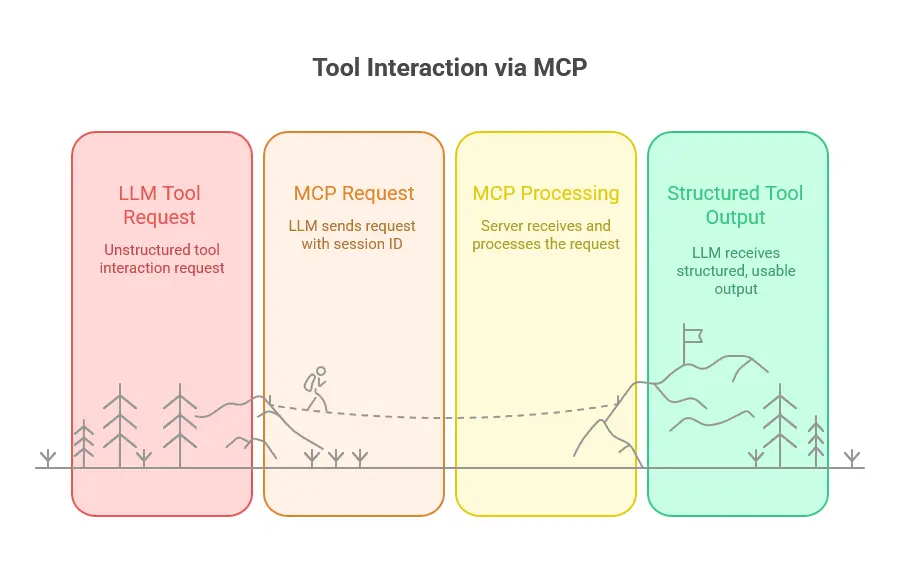

How It Works

- An LLM requests to interact with a tool via an MCP message.

- The message carries a request ID and contextual metadata; where the transport supports sessions, a session ID ties it to earlier interactions.

- The MCP server receives and processes the request.

- The server sends back structured output, which the LLM can understand.

Unlike traditional pipelines, MCP removes the need for custom integration code per tool. It is plug-and-play: once a server exists for a tool, any compliant client can access it.
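The request/response flow described above can be sketched with plain JSON-RPC 2.0 messages. The `tools/call` method and the `content` list in the result follow the message shape used by the MCP specification; the `get_order_status` tool, its arguments, and the in-process `handle_request` dispatcher are hypothetical stand-ins for a real server and transport.

```python
import json

# Hypothetical tool implementation; a real server would wrap a CRM, API, etc.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} has shipped"

TOOLS = {"get_order_status": get_order_status}

def make_tool_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

def handle_request(request: dict) -> dict:
    """Toy server-side dispatcher: returns a JSON-RPC result or error."""
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602,
                          "message": f"Unknown tool: {params['name']}"}}
    text = tool(**params["arguments"])
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}],
                       "isError": False}}

request = make_tool_call(1, "get_order_status", {"order_id": "A-1001"})
response = handle_request(request)
print(json.dumps(response, indent=2))
```

The response reuses the request's `id`, which is how JSON-RPC lets a client match replies to the calls it made. In a real deployment the two dictionaries would travel over a transport such as stdio or HTTP rather than a direct function call.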

Benefits

- Context Preservation: Ensures information continuity across sessions.

- Tool Interoperability: Connects models with multiple services seamlessly.

- Secure & Structured: Includes permissioning, scopes, and governance.

- Developer Efficiency: One integration gives access to many tools.

MCP is currently being adopted by developers, startups, and large enterprises alike as the backbone of intelligent AI systems.

Why Model Context Protocol Matters

1. AI Needs Context

Without context, AI models forget previous conversations, user preferences, and workflows, which leads to broken experiences. MCP addresses this by giving models a standard way to retrieve that context from external tools and data sources.

2. Cross-Tool Interoperability

MCP enables LLMs to share context across a range of tools—from databases and APIs to browsers and internal systems.

3. Unified Architecture

With MCP, you no longer need separate connectors for each tool. One protocol standardizes communication.
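As a rough illustration of one protocol replacing many connectors, the sketch below sends the same `tools/list` request to two stand-in servers and merges what they advertise. The `tools/list` method and the `name`, `description`, and `inputSchema` fields mirror the MCP specification; the `FakeServer` class, the server names, and the tool definitions are invented for this example, and no network transport is involved.

```python
from dataclasses import dataclass, field

@dataclass
class FakeServer:
    """In-memory stand-in for an MCP server (hypothetical; no real transport)."""
    name: str
    tools: list = field(default_factory=list)

    def handle(self, request: dict) -> dict:
        if request["method"] == "tools/list":
            return {"jsonrpc": "2.0", "id": request["id"],
                    "result": {"tools": self.tools}}
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}

def discover_tools(servers: list) -> dict:
    """Send the same request to every server and index tools by 'server/tool'."""
    catalog = {}
    for request_id, server in enumerate(servers, start=1):
        reply = server.handle({"jsonrpc": "2.0", "id": request_id,
                               "method": "tools/list"})
        for tool in reply["result"]["tools"]:
            catalog[f"{server.name}/{tool['name']}"] = tool["description"]
    return catalog

crm = FakeServer("crm", [{
    "name": "find_customer",
    "description": "Look up a customer by email",
    "inputSchema": {"type": "object",
                    "properties": {"email": {"type": "string"}}},
}])
files = FakeServer("files", [{
    "name": "read_file",
    "description": "Read a text file",
    "inputSchema": {"type": "object",
                    "properties": {"path": {"type": "string"}}},
}])

catalog = discover_tools([crm, files])
print(catalog)
```

The client code in `discover_tools` never branches on which server it is talking to, which is the practical meaning of a unified architecture here.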

4. Secure Information Flow

MCP servers enforce access control, encryption, and structured permissions.
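One way to picture structured permissions is a server-side check that runs before any tool executes. This is a minimal sketch under assumed names: the scope strings, the `REQUIRED_SCOPES` table, and the `call_tool` helper are hypothetical and are not taken from the MCP specification.

```python
class PermissionDenied(Exception):
    """Raised when a caller lacks the scope a tool requires."""

# Hypothetical mapping from tool name to the scope it needs.
REQUIRED_SCOPES = {
    "read_record": "records:read",
    "delete_record": "records:write",
}

RECORDS = {"42": "Ada Lovelace"}

def read_record(record_id: str) -> str:
    return RECORDS[record_id]

def delete_record(record_id: str) -> str:
    RECORDS.pop(record_id)
    return f"deleted {record_id}"

TOOLS = {"read_record": read_record, "delete_record": delete_record}

def call_tool(name: str, arguments: dict, granted_scopes: set) -> str:
    """Run a tool only if the caller was granted the scope it requires."""
    required = REQUIRED_SCOPES[name]
    if required not in granted_scopes:
        raise PermissionDenied(f"{name} requires scope {required!r}")
    return TOOLS[name](**arguments)

read_only = {"records:read"}
print(call_tool("read_record", {"record_id": "42"}, read_only))

try:
    call_tool("delete_record", {"record_id": "42"}, read_only)
except PermissionDenied as exc:
    print(f"blocked: {exc}")
```

Keeping the check in one place, ahead of every tool, means a newly added tool is denied by default until it is given an entry in the scope table.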

5. Developer Productivity

Reusable code and a shared standard can shorten integration work considerably, since a server written once can be reused by every compliant client.

6. Real-World Adoption

Companies like Microsoft have begun integrating MCP into Windows AI Foundry. Anthropic’s Claude models support it natively.

Top 10 Use Cases of Model Context Protocol

Use Case 1: Multi-Turn Conversational AI

Problem: In traditional chatbots, context gets lost after a few turns, leading to repetition and poor UX.

MCP Solution: Maintains long-term memory across sessions.

Example: Customer support bots that remember order history, preferences, and prior issues.

Outcome: Reduces customer effort, boosts satisfaction.

Use Case 2: Cross-Platform AI Applications

Problem: AI behavior is inconsistent across web, mobile, and voice interfaces.

MCP Solution: Context travels with the user across platforms.

Example: A user books a flight in the app, then continues managing it via a voice assistant.

Outcome: Cohesive experiences across devices.

Use Case 3: AI-Powered Content Creation Workflows

Problem: Context is fragmented across teams and tools.

MCP Solution: Maintains project-level context across writing, design, and review tools.

Example: An AI copywriter continues editing a blog using notes added in Figma via MCP.

Outcome: Smoother collaboration and reduced errors.

Use Case 4: Enterprise AI Integration

Problem: ERPs, CRMs, and databases are siloed.

MCP Solution: Connects these systems via a standard protocol.

Example: Sales AI pulls data from both Salesforce and Oracle ERP using MCP.

Outcome: Unified view of customers, streamlined operations.

Use Case 5: Personalized Learning Systems

Problem: Fragmented learning records lead to repeated lessons.

MCP Solution: Tracks learning state, goals, and performance.

Example: AI tutor adjusts lessons based on past interactions across apps.

Outcome: Personalized education at scale.

Use Case 6: Healthcare AI Applications

Problem: Context switching between patient systems is risky.

MCP Solution: Connects EHRs, symptom checkers, and diagnostic tools.

Example: AI assistant suggests treatment using real-time vitals and patient history.

Outcome: Safer, faster clinical decisions.

Use Case 7: Financial Services AI

Problem: Risk analysis requires multi-model inputs (credit, fraud, behavior).

MCP Solution: Aggregates inputs from multiple data sources via MCP.

Example: AI banker reviews credit scores, transactions, fraud alerts in one session.

Outcome: Smarter financial advice and approvals.

Use Case 8: IoT and Edge AI Integration

Problem: Context is lost between edge and cloud systems.

MCP Solution: Context syncs between local sensors and central AI models.

Example: Factory AI tracks machine wear across edge and cloud.

Outcome: Predictive maintenance, less downtime.

Use Case 9: Gaming and Interactive Media

Problem: Games struggle to maintain story context across levels.

MCP Solution: Stores player history and choices across game states.

Example: AI narrator adapts based on past decisions.

Outcome: Dynamic storytelling and immersion.

Use Case 10: Research and Development

Problem: Experimental context is hard to replicate.

MCP Solution: Tracks inputs, tools, outputs across experiments.

Example: Drug discovery workflow tracked via MCP.

Outcome: Better reproducibility and insights.

MCP Implementation Best Practices

- Plan Your Architecture: Map tools and their required permissions.

- Use the Official SDKs: The MCP project publishes SDKs for Python, TypeScript/JavaScript, Go, Rust, and other languages.

- Define Clear Session Policies: When to start, store, expire sessions.

- Optimize for Performance: Use caching, local MCP servers for speed.

- Monitor Tool Usage: Log calls, durations, errors.

- Secure by Design: Validate inputs, scope permissions, encrypt data.

- Avoid Bloat: Keep context relevant and manageable.
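To make the session-policy item in the list above concrete, here is a minimal sketch of an in-memory store that starts, refreshes, and expires sessions on a fixed time-to-live. The `SessionStore` class, its method names, and the 30-minute TTL are assumptions chosen for illustration; MCP itself does not prescribe how an application stores session context.

```python
import time

class SessionStore:
    """In-memory session context with a fixed time-to-live (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock       # injectable so expiry can be tested
        self._sessions = {}      # session_id -> (last_seen, context dict)

    def get(self, session_id: str) -> dict:
        """Return the live context for a session, starting fresh if expired."""
        now = self.clock()
        entry = self._sessions.get(session_id)
        if entry is None or now - entry[0] > self.ttl_seconds:
            context = {}
        else:
            context = entry[1]
        self._sessions[session_id] = (now, context)  # refresh last-seen time
        return context

    def purge_expired(self) -> int:
        """Drop expired sessions and report how many were removed."""
        now = self.clock()
        expired = [sid for sid, (seen, _) in self._sessions.items()
                   if now - seen > self.ttl_seconds]
        for sid in expired:
            del self._sessions[sid]
        return len(expired)

store = SessionStore(ttl_seconds=1800)
store.get("session-abc")["last_order"] = "A-1001"
print(store.get("session-abc"))
```

A sliding TTL like this one extends a session each time it is used. A production system would more likely back the store with a database or cache service and add an absolute maximum lifetime.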

Tools and Frameworks for MCP

- Reference Servers: Filesystem, GitHub, Search, Weather

- SDKs: Python, Go, Rust, JavaScript

- Platforms: Claude Desktop, Windows AI Foundry

- Tutorials: modelcontextprotocol.io/examples

- Community: GitHub, Reddit, Discord, Dev.to

Challenges and Limitations

The Model Context Protocol (MCP), while powerful, comes with its own set of challenges and limitations. Security remains a key concern, as enterprises require granular permission controls and comprehensive audit trails to ensure data integrity and compliance. On the performance front, relying on remote servers can introduce latency, especially in time-sensitive applications. Additionally, tool coverage is still evolving—not all existing tools and platforms have MCP wrappers or integrations, limiting its immediate applicability across the tech stack. Lastly, the adoption curve presents a hurdle; teams accustomed to legacy workflows must undergo a mindset shift and adapt to the MCP framework, which may require retraining and process reengineering.
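The latency concern can be softened for read-only tools by caching results on the client side. The sketch below memoizes calls by tool name and arguments; `CachingClient` and `fake_remote` are hypothetical names, and `fake_remote` merely stands in for a network round trip to a remote server.

```python
import json

class CachingClient:
    """Wraps a slow tool-calling function and memoizes results by (tool, args)."""

    def __init__(self, call_remote):
        self._call_remote = call_remote  # function(name, arguments) -> result
        self._cache = {}
        self.remote_calls = 0

    def call(self, name: str, arguments: dict):
        # Serialize with sorted keys so equal dicts share one cache key.
        key = (name, json.dumps(arguments, sort_keys=True))
        if key not in self._cache:
            self.remote_calls += 1
            self._cache[key] = self._call_remote(name, arguments)
        return self._cache[key]

def fake_remote(name: str, arguments: dict) -> str:
    """Hypothetical stand-in for a network round trip to a remote server."""
    return f"{name} -> {sorted(arguments.items())}"

client = CachingClient(fake_remote)
client.call("get_weather", {"city": "Oslo", "units": "metric"})
client.call("get_weather", {"units": "metric", "city": "Oslo"})  # cache hit
print(client.remote_calls)
```

This only suits tools whose results are safe to reuse. Calls with side effects, or data that changes quickly, should bypass the cache or use a short expiry.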

Future of MCP

The future of Model Context Protocol (MCP) is poised to unlock even greater capabilities across enterprise AI ecosystems. Semantic context ranking will enable more intelligent and prioritized context injection, ensuring that LLMs receive the most relevant information at the right time. Toolchain orchestration is set to evolve, allowing seamless coordination between multiple tools, agents, and workflows—further boosting productivity and automation. As IoT support expands, MCP will be able to ingest and respond to real-time data streams from connected devices, opening doors to smarter environments. Standardization across LLMs is also on the horizon, enabling interoperability and consistent performance regardless of the underlying model provider. Finally, enterprise registries will play a key role by offering centralized repositories of vetted tools, datasets, and prompts—ensuring secure, scalable, and auditable deployments across organizations.

Getting Started

- Install an SDK: pip install mcp (the official Python SDK)

- Register your first server (e.g., the reference filesystem server)

- Add tool config to your LLM client (Claude, GPT, etc.)

- Test interactions and observe logs

- Expand with custom tools
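For the tool-config step, the snippet below prints a JSON config in the `mcpServers` layout that Claude Desktop reads from its `claude_desktop_config.json` file. It assumes Node.js and `npx` are installed so the reference filesystem server package can be launched; the directory path is a placeholder, `build_client_config` is a helper invented for this example, and other clients may expect a different config format.

```python
import json

def build_client_config(allowed_dir: str) -> dict:
    """Return a config dict that launches the reference filesystem server via npx."""
    return {
        "mcpServers": {
            "filesystem": {
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-filesystem",
                         allowed_dir],
            }
        }
    }

# "/path/to/allowed/dir" is a placeholder; use a directory you want exposed.
config = build_client_config("/path/to/allowed/dir")
print(json.dumps(config, indent=2))
```

The directory passed as the last argument is the only part of the file system the server is allowed to touch, so it is worth starting with a narrow, low-risk folder.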

Conclusion

Model Context Protocol is not just a new integration standard—it’s the foundation of interoperable, intelligent AI ecosystems. From customer support to healthcare, IoT to gaming, the ability to share structured context is redefining what’s possible with AI.

Start with a simple use case. Explore the official tools. Join the community. And start building intelligent, connected, context-aware AI systems.

FAQs

Q1: Is Model Context Protocol open source?

Yes, MCP is open source and community-driven, with active contributions on GitHub.

Q2: Which LLMs support MCP?

Anthropic's Claude supports MCP natively. OpenAI and Google have also added MCP support to their own developer tooling, so GPT and Gemini models can be connected to MCP servers as well.

Q3: Can I build my own MCP server?

Absolutely! You can use Python, Go, Rust or JavaScript to build a compliant server.

Q4: How is context stored securely?

Contexts are scoped, encrypted, and often stored locally or via enterprise-regulated cloud.

Q5: Is MCP production-ready?

Yes. Microsoft, Anthropic, and others already use it in production workflows.