You may have heard about AI that can talk to you via text, or AI that can generate images. But imagine an engagement platform that can converse with users by seamlessly using multiple data types, including but not limited to image, text, speech, and numerical data. Multimodal AI does exactly that. It converses naturally with humans by combining these data modalities, processing each with its own algorithms and merging the results to achieve better performance.

Because of these capabilities, multimodal AI can outperform traditional unimodal AI systems, which work with only a single type of data. This has led to widespread use of multimodal AI in many business domains. Primary areas of use include customer experience, social media analysis, and employee training and development.

The multimodal AI scene is rapidly expanding. The global multimodal AI market is projected to grow from USD 1.0 billion in 2023 to USD 4.5 billion by 2028, at a CAGR of 35.0% during the forecast period. This has led tech giants to invest in the technology. The most popular models by far include:

- Google Gemini

- GPT-4V

- Inworld AI

- Meta ImageBind

- Runway Gen-2
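The market projection quoted above can be sanity-checked with the standard compound-growth formula. The sketch below is only an arithmetic check of the quoted figures (USD 1.0 billion in 2023, 35.0% CAGR, five years to 2028); the function names are illustrative and not taken from any market report.

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Recover the constant annual growth rate that links start and end."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the article: USD 1.0B (2023) growing at 35% for 5 years.
projected_2028 = project_value(1.0, 0.35, 5)
rate = implied_cagr(1.0, 4.5, 5)

print(f"Projected 2028 market size: USD {projected_2028:.2f} billion")
print(f"CAGR implied by 1.0 -> 4.5 over 5 years: {rate:.1%}")
```

Compounding 1.0 at 35% for five years gives roughly 4.48, which is consistent with the rounded USD 4.5 billion figure.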

Benefits of Multimodal AI for Businesses

Businesses have adopted multimodal AI in a variety of innovative and creative ways, so listing every benefit for every use case would make for an endless list. However, three primary benefits hold true across all forms of usage. They are as follows:

- Enhanced Comprehension: Multimodal AI stands out for its capacity to process a variety of data modalities, including text, images, and speech, all at once. This integrated approach allows the model to discern nuanced patterns and intricate relationships that might elude detection when each modality is considered independently. By examining diverse sources of information simultaneously, multimodal AI achieves a more comprehensive understanding of complex datasets.

- Improved Decision-Making: Multimodal AI steps up decision-making by combining insights from text, images, and speech. This all-in-one approach helps grasp complex situations better, capturing subtle details for a more accurate understanding. It’s like having a comprehensive toolkit for decision-makers, allowing them to make well-informed choices across various sectors, from business strategy to healthcare and beyond.

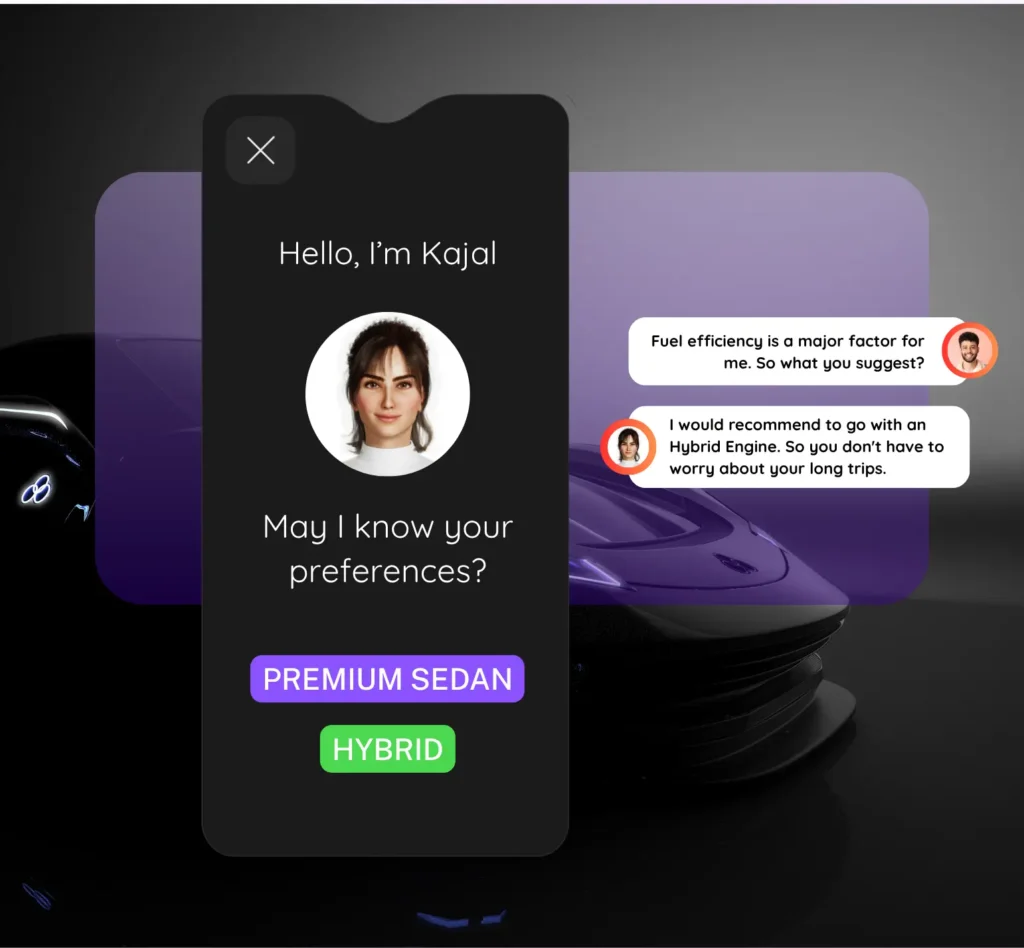

- Personalized User Experiences: Multimodal AI takes personalization to the next level by blending text, images, and speech data. This means a more tailored user experience, where the system understands individual preferences for things like e-commerce suggestions or content recommendations. It’s like having a personal assistant that gets you, making interactions more engaging and strengthening the bond between users and businesses.

Top 5 Multimodal AI Models In 2024

A. Google Gemini

Google Gemini stands out as a versatile tool. It’s a natively multimodal large language model (LLM) that can handle text, images, video, code, and audio. It comes in three versions, Gemini Ultra, Gemini Pro, and Gemini Nano, each tailored to specific user needs. The top-performing one is Gemini Ultra, which Google reports exceeds prior state-of-the-art results, including GPT-4's, on 30 out of 32 widely used benchmarks, as highlighted by Demis Hassabis, CEO and co-founder of Google DeepMind.

Applications in Business

For businesses diving deep into research, Gemini could be a game-changer. According to Google, there are three flavours of Gemini. First up is Gemini Nano, built to power generative AI apps on mobile devices. Then there’s Gemini Pro, crafted for large-scale deployment. Finally, there’s Gemini Ultra, the heavyweight designed for highly complex tasks that demand extra computing power and reasoning.

Key Features and Advantages

- Multimodality allows Gemini to process various data types like text, images, audio, video, and even code, seamlessly and all at once.

- Gemini goes beyond simply recalling and repeating factual data. It is designed to reason about, analyze, and answer complex questions.

- It can not only generate code but also debug and explain it.

- The advanced information retrieval feature allows it to understand human context, allowing it to provide info that goes beyond keywords.

- It can analyze info from different sources to verify the information it is providing to a user.

- It can provide personalized search results based on prior interactions.

- Its creative and expressive capabilities include art and music generation, multimodal storytelling, and language translation.

- It is resource-efficient and hence, very scalable.

- Its continuous learning abilities help it keep growing with each interaction.

B. GPT-4V

ChatGPT, now enhanced with GPT-4 and integrated vision capabilities (GPT-4V), has garnered considerable attention, reaching 100 million weekly active users as of November 2023. It goes beyond text-only interaction: it processes text, image, and voice prompts, and it can respond in up to five distinct AI-generated voices. GPT-4V is a substantial player in the field of multimodal AI, offering users a comprehensive and sophisticated experience.

Applications in Business

GPT-4, which handles eight times more words than its predecessors, is a versatile tool with broad applications across business sectors. Beyond content creation, it is used for data mining, predictive analytics, contextualizing data through charts, summarizing PDFs and text, creating websites from images, contract analysis, and more. Its capabilities extend to generating rich content, including fiction and music, and it finds applications in marketing, sales, translation services, eLearning, software development, big data analytics, international trade, customer experience, product management, cybersecurity, and financial transactions. Despite this potential, challenges remain, including the possible replacement of some roles and concerns about the quality of generated code. Notable implementations include Stripe, which uses GPT-4 to enhance its platform and assist developers. The diverse ways GPT-4 can be integrated into business operations underscore its capacity to reshape various industries.

Key Features and Advantages

- Multilingual input processing allows it to understand data and contexts in multiple languages.

- Its advanced image recognition feature can interpret complex visual cues and provide detailed answers.

- GPT-4’s steerability feature allows users to change its behaviour on demand, providing system messages for task-setting and specific instructions. For example, it can offer teachers recommendations on effective communication and suggest questions for class discussions.
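To make the steerability point concrete, here is a minimal sketch of how a system message can set the task before the user's request. It assumes the `system`/`user` role convention used by chat-style APIs such as OpenAI's Chat Completions format. The helper name `build_chat_request` and the example prompts are hypothetical, and the code only builds the request payload; it does not call any API.

```python
import json


def build_chat_request(system_message: str, user_prompt: str, model: str = "gpt-4") -> dict:
    """Build a chat-style request in which the system message steers behaviour.

    Hypothetical helper for illustration: it only assembles a payload dict.
    """
    return {
        "model": model,
        "messages": [
            # The system message sets the role, tone, and constraints.
            {"role": "system", "content": system_message},
            # The user message carries the actual request.
            {"role": "user", "content": user_prompt},
        ],
    }


request = build_chat_request(
    system_message="You are a teaching coach. Give concise, practical advice.",
    user_prompt="Suggest three discussion questions for a class on photosynthesis.",
)
print(json.dumps(request, indent=2))
```

Changing only the system message (for example, to a legal reviewer or a sales assistant) changes how the same user prompt is handled, which is the behaviour the steerability feature describes.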

C. Inworld AI

Inworld AI, the character engine, hands developers the reins to breathe life into non-playable characters (NPCs) in digital realms. Thanks to multimodal AI, Inworld AI lets these NPCs engage in conversations using natural language, voice, animations, and emotions. Imagine this: developers can whip up smart NPCs with a mind of their own—autonomous actions, distinct personalities, emotional expressions, and even memories of past events. It’s all about cranking up the immersion level in digital experiences.

Applications in Business

In the business domain, Inworld AI opens up a myriad of possibilities. Developers can leverage this character engine to fashion realistic non-playable characters (NPCs) for diverse digital scenarios. These intelligent NPCs, fueled by multimodal AI, communicate through natural language, voice, animations, and emotions. The practical applications are diverse, from elevating customer engagement in virtual settings to crafting interactive training simulations featuring emotionally responsive characters. Businesses can harness Inworld AI to create virtual personalities for customer service bots, interactive training modules, or even virtual brand representatives. The outcome? A heightened level of immersion and interaction that translates into more engaging and effective digital experiences for businesses and their users.

Key Features and Advantages

- Tailor personalities, memories, and cognitive abilities as per your preferences.

- Define custom triggers, set specific goals, and orchestrate desired actions.

- Embrace multilingual capabilities with text-to-speech, automatic speech recognition, and a variety of expressive voices.

- Achieve seamless synchronization of gestures and animations for a more lifelike experience.

- Enjoy avatar flexibility; bring your own character design into the mix.

D. Meta ImageBind

Meta ImageBind, as an open-source multimodal AI model, sets itself apart by processing diverse data types, including text, audio, visual, movement, thermal, and depth data. What makes it unique is its ability to combine information across all six modalities, allowing it to create art by merging disparate inputs. For instance, it can seamlessly blend the audio of a car engine with an image of a beach, showcasing its versatility in handling complex and varied data sources.

Applications in Business

Meta ImageBind’s capacity to process diverse data types—text, audio, visual, movement, thermal, and depth data—finds practical application across multiple business sectors. In content creation and marketing, it excels at crafting engaging multimedia content and visually striking advertisements by merging various modalities. Industries focused on artistic expression and design benefit from ImageBind’s support in creating innovative and visually compelling designs. For product development and prototyping, the model accelerates processes by providing a comprehensive understanding of user needs through the incorporation of multimodal data. Its relevance extends to entertainment, healthcare, training simulations, research and development, and user interface design, making it a valuable asset in enhancing user experiences and revolutionizing industries. The ability to seamlessly merge information across multiple modalities positions Meta ImageBind as a versatile tool with practical and innovative solutions for a wide range of business applications.

Key Features and Advantages

- Processes diverse data types: text, image/video, audio, 3D depth, thermal, and IMU.

- Learns a unified embedding space, linking objects in photos with sounds, shapes, temperatures, and movements.

- Can outperform specialist models trained on a single modality by analyzing diverse information together.

- Enhances Make-A-Scene for creating images from audio inputs.

- Expands multimodal search functions for accurate content recognition and moderation.

- Enables cross-modal retrieval and audio-to-image generation.

- Exhibits scalable performance, improving with vision model strength.

- Performs strongly on audio and depth tasks and on emergent zero-shot recognition.

- Opens avenues for richer human-centric AI, exploring new modalities.
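The idea of a unified embedding space and cross-modal retrieval from the list above can be illustrated with a toy sketch. The three-dimensional vectors and file names below are made up for illustration and are not produced by ImageBind. The only assumption is that items from different modalities are mapped into one shared vector space, so an audio query can be compared directly with image embeddings using cosine similarity.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query: list[float], candidates: dict[str, list[float]]) -> str:
    """Return the candidate whose embedding is most similar to the query."""
    return max(candidates, key=lambda name: cosine_similarity(query, candidates[name]))


# Made-up image embeddings in a shared 3-D space (illustrative only).
image_embeddings = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "car.jpg": [0.1, 0.9, 0.1],
    "dog.jpg": [0.0, 0.2, 0.9],
}

# Made-up embedding of an engine-sound clip in the same space.
engine_audio = [0.2, 0.95, 0.05]

best_match = retrieve(engine_audio, image_embeddings)
print(best_match)  # car.jpg
```

Because every modality lands in the same space, the same `retrieve` function works for audio-to-image, text-to-audio, or any other pairing without modality-specific matching logic.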

E. Runway Gen-2

Runway Gen-2 stands out as a versatile multimodal AI model, specializing in video generation. Users can input text, images, or videos, harnessing its capabilities for creating original video content through text-to-video, image-to-video, and video-to-video functionalities. Whether replicating the style of existing images or prompts, editing video content, or aiming for higher fidelity results, Gen-2 proves to be an ideal choice for those engaged in creative experimentation.

Applications in Business

Runway Gen-2 presents diverse business applications, notably in content creation and marketing, where its text-to-video, image-to-video, and video-to-video functionalities prove valuable. Creative fields benefit from its role in experimentation and original content creation. Video editing services find utility in replicating styles and achieving higher fidelity results. For social media marketing, educational content creation, product demonstrations, brand promotion, and advertising campaigns, Gen-2 offers a versatile tool. In the entertainment industry, it supports engaging video content creation, while businesses utilizing virtual events or presentations enhance their visual storytelling through Gen-2’s capabilities.

Key Features and Advantages

- Converts any picture, including those generated on models like Midjourney, into videos using tools like Runway Motion Brush.

- iOS app available for convenient multimedia generation on smartphones.

- Allows users to create new videos with simple text prompts.

- Free accounts can generate four-second videos to download and share on any platform, albeit with a watermark.

Future Trends and Implications of Multimodal AI in Business

Multimodal AI, blending text, images, and speech, is a game-changer in artificial intelligence. It improves how machines and humans interact, impacting healthcare, education, entertainment, and business. This tech understands the world better, making decisions and problem-solving smarter. It’s becoming more accessible and efficient, promising a future with smarter technology. Industries are catching on, expecting a surge in multimodal AI development.

Yet businesses adopting multimodal AI face challenges. It needs hefty infrastructure, which makes adoption harder for smaller companies. Ethical concerns about privacy and bias are critical and demand careful handling. The need for professionals skilled in both AI and the relevant industry adds complexity. Success with multimodal AI requires businesses to invest in ethics and workforce development so they can make the most of it while overcoming these challenges.

Conclusion

In a nutshell, multimodal AI is the future – no doubts there. The blend of text, images, and audio in AI systems brings a human-like understanding, making them more accurate and efficient.

Stay in the Loop with Top Multimodal AI Models:

Keeping up with the latest multimodal AI models is key. As tech evolves, new models emerge, boosting performance and capabilities. Staying informed allows professionals to maximize the potential of multimodal AI, leading to better decision-making and innovation.

The future of multimodal AI in business looks promising. From enhancing customer experience to streamlining internal processes, it’s set to transform industries. Businesses embracing these changes can gain a competitive edge, driving innovation and efficiency. As multimodal AI advances, its applications in business will diversify, offering new growth opportunities.

DaveAI recently launched GRYD, a GenAI middleware hub that empowers enterprises to securely deploy cutting-edge AI experiences. GRYD is multimodal, self-sufficient, and secure. With 10+ large AI model integrations, trained SLMs with 15 million parameters, and a strong commitment to 100% data security and control, GRYD signifies a leap in AI innovation.