Introduction

The way humans interact with AI is rapidly evolving. Initially, the focus was on prompt engineering—learning how to craft questions, statements, or instructions to extract desired outputs from models like ChatGPT or Claude. However, as large language models (LLMs) and multimodal AI systems grow in complexity and capability, a new frontier is emerging: context engineering.

While prompt engineering involves crafting inputs, context engineering involves designing the entire information ecosystem surrounding those inputs. It encompasses curating data, managing memory systems, integrating tools, and dynamically shaping the environment in which AI operates. In essence, prompt engineering is like speaking to AI; context engineering is like teaching it how to think.

This guide explains how context engineering extends AI interaction beyond prompting and why enterprises are adopting it. It covers definitions, techniques, tools, and likely trends, tracing the shift from isolated instructions to orchestrated systems.

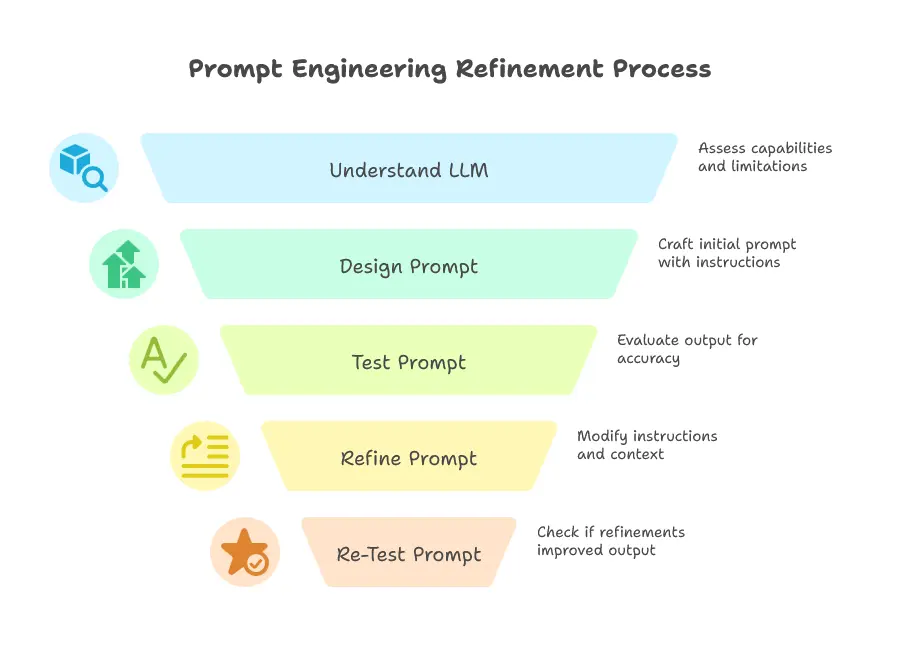

What is Prompt Engineering?

Prompt engineering refers to the practice of crafting and refining textual prompts to guide AI models to generate desired responses. It became popular with the rise of foundation models such as GPT-3, GPT-4, and Claude.

Core Principles:

- Clarity: Clear, specific prompts often lead to better results.

- Structure: Using structured formats like bullet points or templates improves consistency.

- Role Assignment: Assigning roles (“You are a helpful assistant…”) shapes model behavior.

- Few-shot and zero-shot prompting: Including worked examples in the prompt (few-shot) or relying on the model's generalization with no examples (zero-shot).
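
The principles above can be combined in a small prompt template. The sketch below is only an illustration: the `Example` type, the `build_prompt` helper, and the support-ticket task are hypothetical names chosen for this example, not part of any library. It shows role assignment, a fixed structure, and the difference between a few-shot and a zero-shot prompt.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked input/label pair shown to the model (hypothetical type)."""
    text: str
    label: str


def build_prompt(role: str, task: str, examples: list[Example], query: str) -> str:
    """Assemble role, task, optional few-shot examples, and the final query."""
    lines = [role, "", task, ""]
    for ex in examples:  # an empty list yields a zero-shot prompt
        lines += [f"Input: {ex.text}", f"Label: {ex.label}", ""]
    lines += [f"Input: {query}", "Label:"]
    return "\n".join(lines)


ROLE = "You are a helpful assistant that classifies support tickets."
TASK = "Classify each ticket as 'billing' or 'technical'."

few_shot = build_prompt(
    ROLE,
    TASK,
    examples=[
        Example("I was charged twice this month.", "billing"),
        Example("The app crashes on login.", "technical"),
    ],
    query="My invoice shows the wrong amount.",
)
zero_shot = build_prompt(ROLE, TASK, examples=[], query="My invoice shows the wrong amount.")

print(few_shot)
```

Keeping the template in one function means every prompt for this task has the same layout, which is what makes outputs easier to compare across runs.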

Common Prompt Engineering Techniques

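- Chain-of-thought prompting: Asking the model to reason step by step before giving its answer

- Self-consistency: Sampling several reasoning paths and keeping the most common final answer

- ReAct (Reason + Act): Interleaving reasoning steps with tool calls or other actions

- Tree of Thoughts: Exploring and evaluating several branching reasoning paths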
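
Of these techniques, self-consistency is the easiest to sketch in a few lines: sample several answers and keep the one that appears most often. In the sketch below, `sample` stands in for a model call that returns only the final answer of one sampled reasoning path; the function name and the canned answers are assumptions made for illustration.

```python
from collections import Counter
from typing import Callable


def self_consistent_answer(sample: Callable[[], str], n: int = 5) -> str:
    """Draw n independent answers and return the most frequent one."""
    votes = Counter(sample() for _ in range(n))
    answer, _count = votes.most_common(1)[0]
    return answer


# Stand-in for five sampled model responses (a real sampler would call an
# LLM with a non-zero temperature and extract the final answer each time).
canned = iter(["42", "41", "42", "24", "42"])
print(self_consistent_answer(lambda: next(canned), n=5))  # prints 42
```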

Limitations:

- Scalability: Writing prompts for each use case is time-consuming.

- Memory Constraints: A model retains nothing between calls; prior interactions are lost unless they are resent within the context window.

- Lack of context awareness: A static prompt does not account for evolving user needs or external tools.

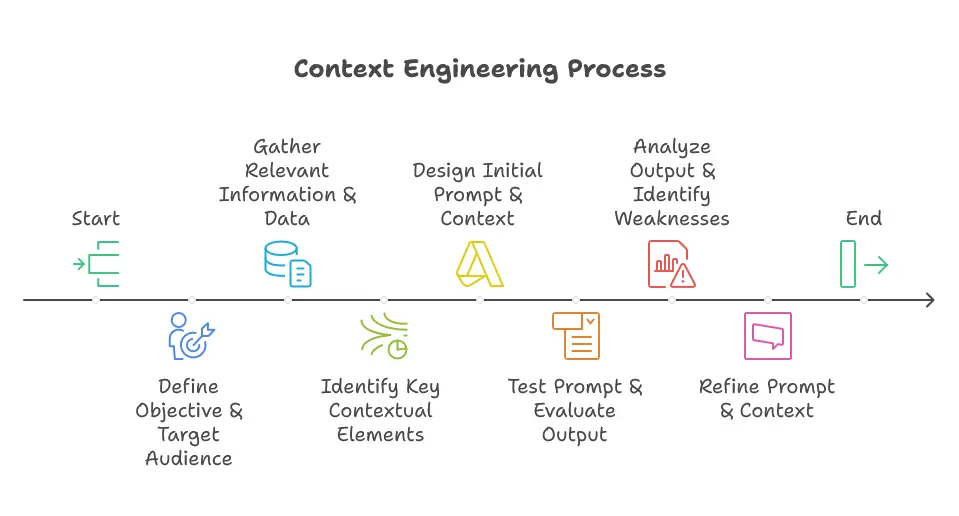

Understanding Context Engineering

Context engineering is the practice of designing and maintaining the entire ecosystem in which an AI model operates—including relevant data, memory, tools, and workflows—to deliver intelligent, dynamic, and personalized experiences.

“Context Engineering is the new skill in AI. It is about providing the right information and tools, in the right format, at the right time.”

Key Components:

1. Data Curation

- Filtering relevant datasets

- Formatting structured and unstructured data

- Adding metadata and embeddings for semantic search

2. Memory Management

- Implementing short-term and long-term memory

- Using vector databases like Pinecone or Weaviate

- Retrieval-augmented generation (RAG)

3. Tool Integration

- Connecting APIs, databases, and software tools

- Designing orchestrators (LangChain, LlamaIndex)

- Contextual routing based on user intent

Context engineering enables a more autonomous, multi-turn, and tool-augmented AI that adapts to the user’s evolving needs.
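
One way to picture how these components meet is a single object that gathers instructions, memory, retrieved data, and tool descriptions, then renders them into one model input. The sketch below is a minimal illustration under stated assumptions: `ContextBundle` and its fields are hypothetical, and a character budget stands in for a real token budget.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Everything the model sees besides the user's latest message."""
    system: str
    history: list[str] = field(default_factory=list)     # short-term memory
    documents: list[str] = field(default_factory=list)   # retrieved data
    tools: dict[str, str] = field(default_factory=dict)  # tool name -> description

    def render(self, user_message: str, max_chars: int = 2000) -> str:
        """Render one model input, dropping the oldest turns if over budget."""
        history = list(self.history)
        while True:
            parts = [self.system]
            if self.tools:
                parts.append("Tools:\n" + "\n".join(f"- {n}: {d}" for n, d in self.tools.items()))
            if self.documents:
                parts.append("Reference material:\n" + "\n".join(f"- {d}" for d in self.documents))
            if history:
                parts.append("Conversation so far:\n" + "\n".join(history))
            parts.append(f"User: {user_message}")
            text = "\n\n".join(parts)
            # Stop when the text fits, or when there is no history left to trim
            # (in which case the result may still exceed the budget).
            if len(text) <= max_chars or not history:
                return text
            history.pop(0)  # drop the oldest turn and try again


bundle = ContextBundle(
    system="You are an HR assistant.",
    history=["User: Hi", "Assistant: Hello, how can I help?"],
    documents=["Leave policy: 25 days per year"],
    tools={"lookup_policy": "Fetch a policy document by name"},
)
print(bundle.render("How many leave days do I have?"))
```

The point of the design is that the prompt itself becomes the last and smallest step; most of the work is deciding what goes into the bundle.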

Key Differences: Context Engineering vs Prompt Engineering

| Feature | Prompt Engineering | Context Engineering |
| --- | --- | --- |
| Focus | Input phrasing | System-level design |
| Scope | One-time interaction | Ongoing, multi-turn interaction |
| Flexibility | Static | Dynamic & adaptive |
| Techniques | Text prompts, few-shot examples | Tool integration, memory, orchestration |
| Use Cases | Text generation, Q&A | Enterprise workflows, copilots, agents |
| ROI | High initial effort | Higher long-term scalability |

While prompt engineering is excellent for prototyping or consumer-facing apps, context engineering is essential for enterprise-grade AI that needs to scale across departments, use cases, and data sources.

Context Engineering Components

1. Data Curation Strategies

- ETL Pipelines: Extract, transform, and load data into vector databases

- Embedding Models: Use models from OpenAI, Cohere, or BAAI to produce semantic embeddings

- Metadata Tagging: Improve filtering and retrieval

2. Memory Systems and Retrieval

- Short-Term Memory: Per-session context

- Long-Term Memory: Persisted across sessions

- Retrieval Systems: Pinecone, Chroma, FAISS

3. Tool Integration Frameworks

- LangChain: Orchestration of LLMs, tools, and memory

- LlamaIndex: Ingestion pipelines for data-aware applications

- Open Agents: Dynamic selection of tools

4. Real-World Implementation Examples

- Customer Support Agents with CRM & ticket access

- HR Assistants fetching policy documents

- Sales Copilots using product catalog & lead data
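
To make the data curation and retrieval components concrete, here is a small sketch of metadata-filtered similarity search in plain Python. It assumes embeddings have already been computed elsewhere; the three-dimensional vectors, the `Chunk` type, and the `dept` tag are toy values invented for illustration. A production system would delegate this to a vector database.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    vector: list[float]       # embedding produced by some embedding model
    metadata: dict[str, str]  # tags used for filtering


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], chunks: list[Chunk],
             filters: dict[str, str], k: int = 2) -> list[Chunk]:
    """Filter by metadata first, then rank the survivors by similarity."""
    candidates = [
        c for c in chunks
        if all(c.metadata.get(key) == value for key, value in filters.items())
    ]
    candidates.sort(key=lambda c: cosine(query_vec, c.vector), reverse=True)
    return candidates[:k]


chunks = [
    Chunk("Leave policy: 25 days per year", [0.9, 0.1, 0.0], {"dept": "hr"}),
    Chunk("Expense policy: submit within 30 days", [0.1, 0.9, 0.0], {"dept": "hr"}),
    Chunk("Pricing sheet for product X", [0.95, 0.05, 0.0], {"dept": "sales"}),
]

# An HR assistant only searches HR documents, even if a sales document
# happens to be closer to the query vector.
for hit in retrieve([1.0, 0.0, 0.0], chunks, filters={"dept": "hr"}):
    print(hit.text)
```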

Practical Examples & Case Studies

Enterprise Applications

- Retail: AI avatars guiding customers through 3D catalogs using context-aware scripts

- BFSI (banking, financial services, and insurance): Tax assistants with memory of previous filings, accessing government APIs

- Automotive: Scheduling test drives with personalized recommendations based on past queries

Development Workflows

- Defining contexts through JSON/YAML

- Using vector databases for knowledge injection

- Implementing fallback chains for tool unavailability
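
As a rough illustration of the first and last items, the sketch below declares a context in JSON and walks a fallback chain when the first tool is unavailable. The config keys (`assistant`, `tool_chain`) and the two tool functions are hypothetical; they simulate an outage rather than call any real service.

```python
import json
from typing import Callable

# Hypothetical context definition; the key names are assumptions.
CONTEXT_JSON = """
{
  "assistant": "sales-copilot",
  "tool_chain": ["crm_api", "cached_catalog"]
}
"""


def crm_api(query: str) -> str:
    raise ConnectionError("CRM unavailable")  # simulate an outage


def cached_catalog(query: str) -> str:
    return f"cached result for {query!r}"


TOOLS: dict[str, Callable[[str], str]] = {
    "crm_api": crm_api,
    "cached_catalog": cached_catalog,
}


def run_with_fallback(chain: list[str], query: str) -> tuple[str, str]:
    """Try each tool in order; return (tool_name, result) from the first success."""
    errors = []
    for name in chain:
        try:
            return name, TOOLS[name](query)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all tools failed: " + "; ".join(errors))


config = json.loads(CONTEXT_JSON)
used, result = run_with_fallback(config["tool_chain"], "lead 1042")
print(used, "->", result)
```

Keeping the chain in configuration rather than code lets the order of tools change per deployment without touching the orchestration logic.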

Performance Comparisons

| Metric | Prompt Engineering | Context Engineering |
| --- | --- | --- |
| Session Recall | Low | High |
| Personalization | Manual | Automated |
| Error Reduction | Moderate | High |
| Tool Access | Limited | Seamless |

Tools and Technologies for Context Engineering

Platforms and Frameworks

- LangChain: Tool and agent orchestration

- LlamaIndex: Data ingestion and context-aware queries

- Pinecone / Weaviate: Vector search infrastructure

- GPT-4 with tool calling: Native tool (function) calling and memory features

Integration Methods

- Webhooks & APIs

- Plugin frameworks (OpenAI, Claude)

- Retrieval-Augmented Generation (RAG)

Best Practices

- Use hybrid retrieval (structured + unstructured)

- Set up memory checkpoints for long interactions

- Build fallback logic to improve reliability
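
A memory checkpoint can be sketched as follows: once the conversation grows past a limit, older turns are folded into a running summary and only the most recent turns are kept verbatim. The `CheckpointedMemory` class is a hypothetical illustration, and the `summarize` callable is a placeholder; in practice it would likely be a model call.

```python
from typing import Callable


class CheckpointedMemory:
    """Keeps recent turns verbatim and folds older ones into a summary."""

    def __init__(self, summarize: Callable[[list[str]], str],
                 max_turns: int = 4, keep_recent: int = 2) -> None:
        if keep_recent < 1 or keep_recent > max_turns:
            raise ValueError("keep_recent must be between 1 and max_turns")
        self.summarize = summarize
        self.max_turns = max_turns
        self.keep_recent = keep_recent
        self.summary = ""
        self.turns: list[str] = []

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        if len(self.turns) > self.max_turns:
            # Checkpoint: summarize everything except the most recent turns.
            old = self.turns[:-self.keep_recent]
            self.turns = self.turns[-self.keep_recent:]
            prior = [self.summary] if self.summary else []
            self.summary = self.summarize(prior + old)

    def context(self) -> list[str]:
        """Return the summary (if any) followed by the verbatim recent turns."""
        header = [f"Summary of earlier conversation: {self.summary}"] if self.summary else []
        return header + self.turns


# Placeholder summarizer that just joins the text it is given.
memory = CheckpointedMemory(summarize=lambda items: " | ".join(items),
                            max_turns=3, keep_recent=2)
for turn in ["t1", "t2", "t3", "t4"]:
    memory.add(turn)
print(memory.context())
```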

Future of AI Interaction

Industry Trends and Predictions

- Autonomous agents with deep context awareness

- Enterprise copilots driving productivity

- Context-as-a-Service platforms

Emerging Technologies

- Semantic context ranking

- Multi-modal context fusion (text + vision + audio)

- Standardization of context formats

Career Implications

- Rise in demand for context engineers

- Hybrid skillset: data + LLM + integration

- Context engineering will be core to enterprise AI roles

Final Thoughts

As AI becomes more integrated into our workflows, the focus is shifting from crafting smart prompts to designing smart environments. Whether you’re a developer, data architect, or AI strategist, now is the time to start learning how to engineer the context—because in the age of LLMs, the prompt is just the beginning.

FAQs

What is context engineering in AI?

Context engineering is the design of an AI’s operational environment, including memory, tools, and curated data, to enable intelligent responses beyond simple prompts.

How is prompt engineering different from context engineering?

Prompt engineering focuses on how a single input is phrased. Context engineering focuses on everything around that input: the data, memory, tools, and workflows the model can draw on.

Do I need to know coding for context engineering?

Some coding helps. Basic scripting, especially in Python, is useful, and frameworks like LangChain and LlamaIndex reduce the integration work.

Is context engineering better than prompt engineering?

For scalable and dynamic enterprise applications, yes. For small tasks or one-off queries, prompt engineering might suffice.